NTU Engineering Innovation and Design Competition

The EID competition, organized by NTU, provided a unique opportunity for engineering undergrads to apply our knowledge to real-life problems.

This competition was an ideal platform for us emerging engineers to leverage our skills and education to address practical issues. It was a chance to translate theory into practice and make a tangible impact.

Our Mission

Our group wanted to use our skills and this opportunity to give back to society. With AR becoming a hot topic, especially with products like Apple Vision Pro, we aimed to leverage this technology to benefit those who truly need it. After much research, we chose to focus on the visually impaired.

Visually Impaired

Navigating the world with impaired vision presents significant challenges. Traditional mobility aids often fall short, leaving users feeling dependent and vulnerable. This lack of autonomy hinders their ability to engage in daily activities and diminishes their quality of life. We identified a critical need for a solution that integrates advanced technology into everyday life, empowering users to navigate with confidence.

Our research concluded that our target community often faces these challenges:

Reading Text

The world caters to those with vision. Not every sign or item has braille on it to help the visually impaired.

Object Identification

For the visually impaired, identifying objects often requires touch, which can be dangerous with sharp, hot, or hazardous items. They frequently need to ask for help to avoid injury.

Obstacle Detection

Visually impaired individuals face significant challenges with obstacle detection, risking injury from unseen hazards like sharp edges, hot surfaces, or uneven terrain.

To empower the visually impaired with greater independence, safety and accessibility in their daily lives

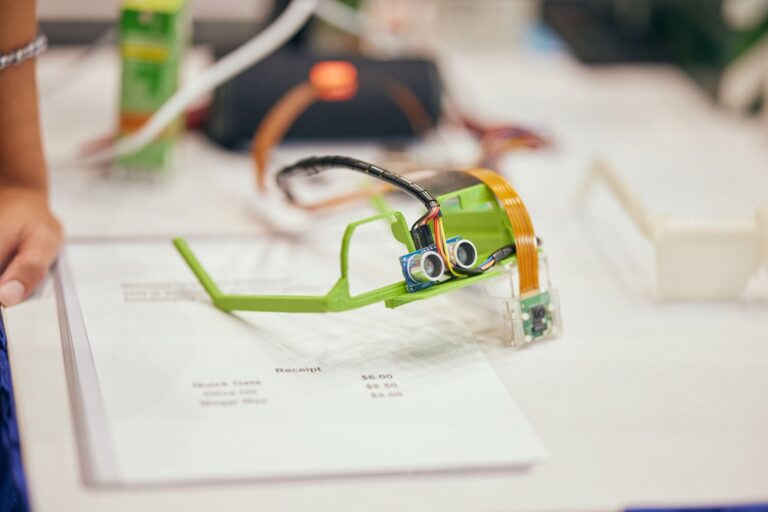

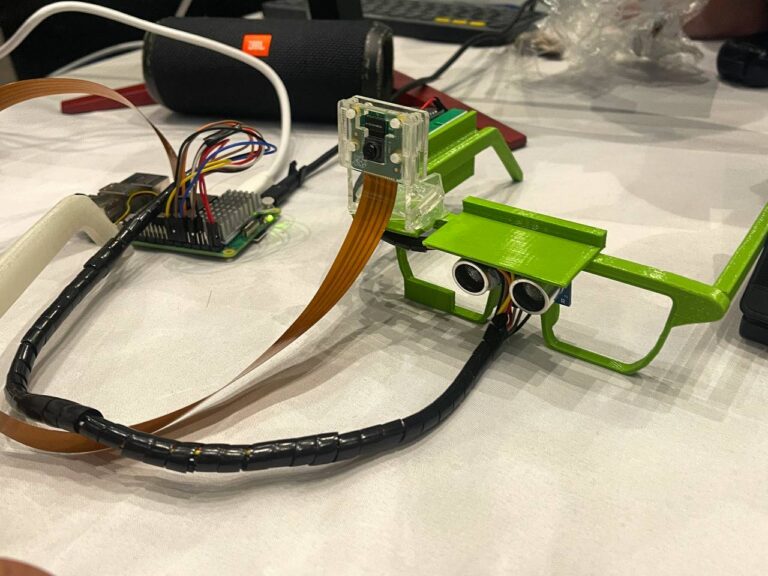

AudioSight Glasses

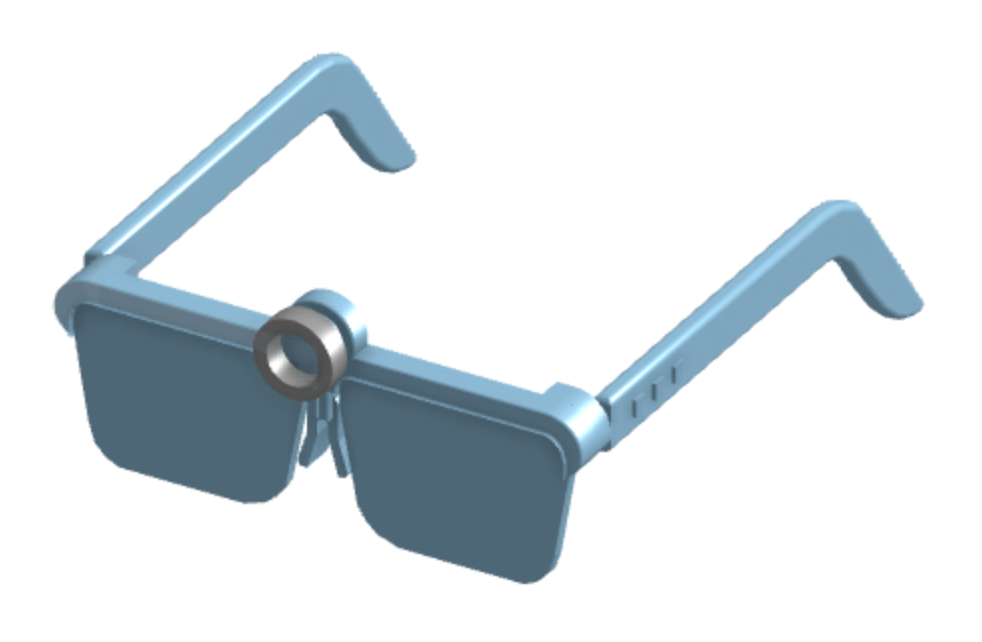

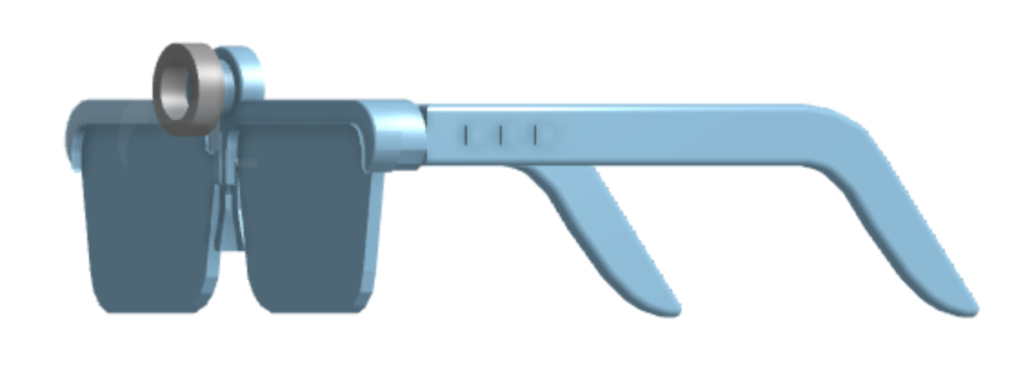

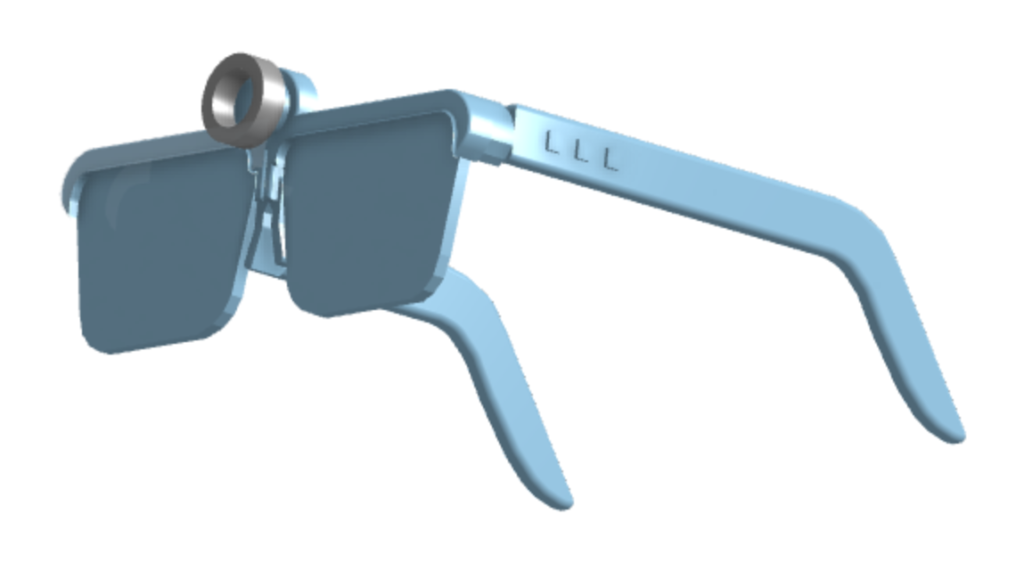

Introducing our award-winning solution, AudioSight Glasses. Our glasses are equipped with a centrally mounted camera and an ultrasonic sensor, enabling obstacle sensing, text-to-speech functionality, and object detection. Users can cycle through these features using tactile physical buttons mounted on the side of the frame, which provide essential sensory feedback for intuitive use.

Integrated small speakers discreetly located on the sides of the glasses deliver audio cues and messages, allowing users to receive assistance without drawing unwanted attention to their visual impairment.

This thoughtful and unobtrusive design empowers users to navigate social situations with confidence, making them feel more comfortable and self-assured in diverse environments.

Redefining accessibility for the visually impaired through advanced sensors and audio feedback to enhance independence, safety, and quality of life.

Key Features

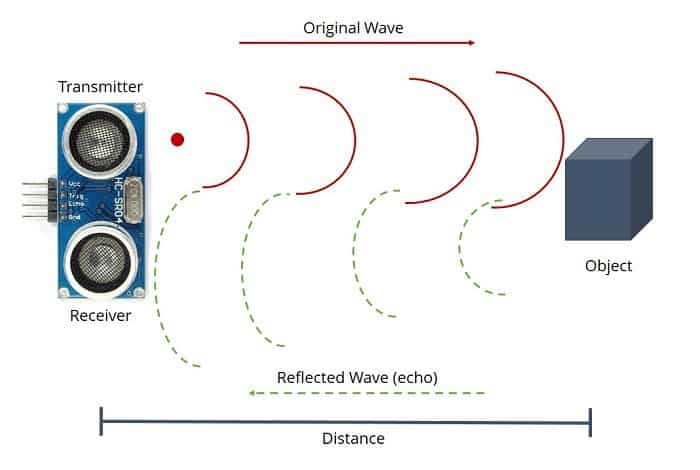

Proximity Alerts

Our ultrasonic sensor detects the distance between the user and objects by using sound waves, producing different tones based on proximity. The closer the object, the higher the pitch.

Optical Character Recognition (OCR)

OCR technology extracts text from images captured by the Raspberry Pi camera. This text is then converted into a machine-readable format, making printed or handwritten text accessible to users.

Object Detection

The object detection feature identifies objects using the Camera Module and reads out the objects detected, helping users recognize their environment.

Text-to-Speech

Our TTS feature converts text into spoken words. This integration of OCR and TTS enables the conversion of text from images into speech, assisting visually impaired users in identifying and understanding text content.

Form Factor

Our design prioritises discretion and convenience, integrating assistive technology into a sleek and compact form factor. Glasses are already widely accepted and worn by millions of people, so the transition to assistive glasses is both familiar and comfortable. As a daily essential, glasses provide a convenient platform for incorporating advanced assistive technologies, allowing users to benefit from enhanced functionality without the need to adapt to a completely new device.

Camera

A centrally mounted camera and an ultrasonic sensor enable advanced obstacle sensing, text-to-speech functionality and object detection.

Optimal Position

Features like text-to-speech, object recognition, and navigation aids can be directly aligned with the user’s line of sight and ears, improving functionality and ease of use.

Ergonomics

Glasses are designed to be worn comfortably for extended periods, distributing weight and minimizing discomfort, which is crucial for assistive technology intended for all-day use.

Discreet

Society is accustomed to seeing individuals wear glasses, so assistive devices in this form do not attract unwanted attention or stigma. Integrated small speakers provide audio cues and messages, allowing users to access assistance discreetly without drawing attention to their visual impairment.

How does it all work?

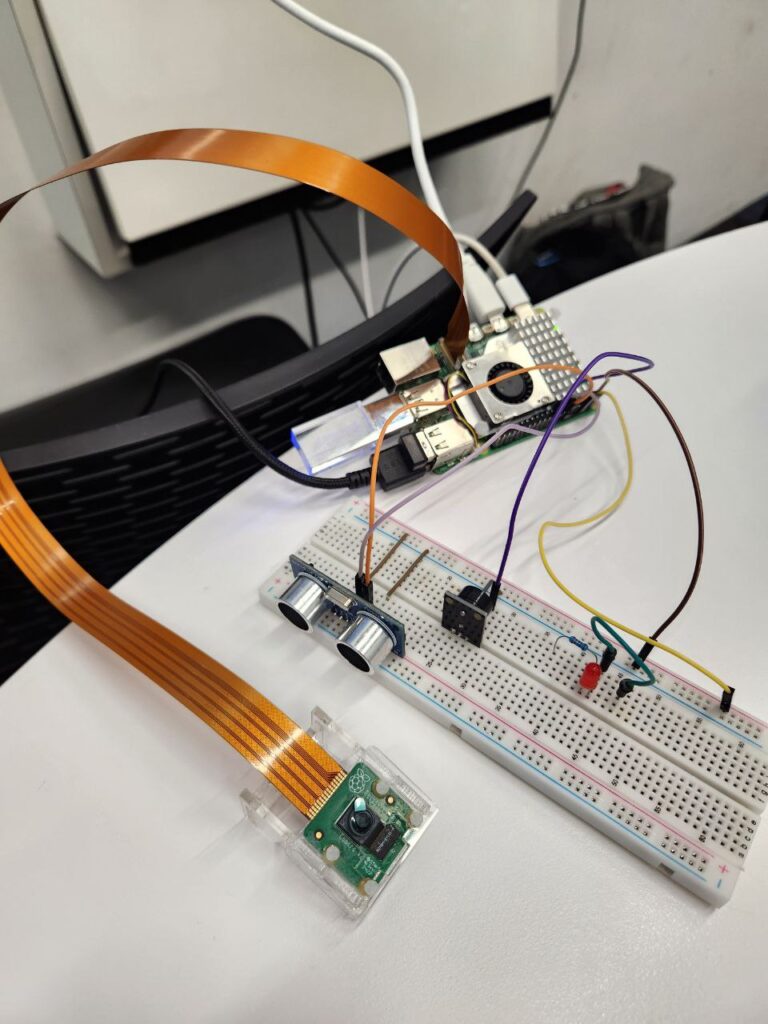

Ultrasonic Sensor

An ultrasonic sensor detects the distance between the user and objects in front of them using sound waves. The sensor emits a pulse and times how long the echo takes to return. Multiplying that time by the speed of sound and halving the result (the pulse travels to the object and back) gives the distance. Different tones are produced depending on the distance of the object: the closer the user is to the object, the higher the pitch. The range of distances can be adjusted within our code.
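The distance calculation and the distance-to-pitch mapping described above can be sketched as follows. This is a minimal illustration, not our exact code: the function names, the 20 cm to 200 cm range, and the 220 Hz to 1760 Hz tone range are assumed values chosen for the example, and reading the echo time from the sensor is left out.

```python
SPEED_OF_SOUND_M_S = 343.0  # approximate speed of sound in air at room temperature


def echo_to_distance_cm(round_trip_s):
    """Convert the round-trip echo time (seconds) into a distance in centimetres."""
    # The pulse travels to the object and back, so only half the path counts.
    return round_trip_s * SPEED_OF_SOUND_M_S / 2 * 100


def distance_to_pitch_hz(distance_cm, near_cm=20.0, far_cm=200.0,
                         low_hz=220.0, high_hz=1760.0):
    """Map a distance to a tone frequency: the closer the object, the higher the pitch.

    near_cm/far_cm and low_hz/high_hz are hypothetical defaults for illustration.
    """
    # Clamp the reading so anything outside the range uses the nearest limit.
    clamped = min(max(distance_cm, near_cm), far_cm)
    # closeness is 0.0 at far_cm and 1.0 at near_cm.
    closeness = (far_cm - clamped) / (far_cm - near_cm)
    return low_hz + closeness * (high_hz - low_hz)
```

Changing `near_cm` and `far_cm` is one way the adjustable range could be exposed. A linear mapping is used here for simplicity.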

Optical Character Recognition (OCR)

Optical Character Recognition (OCR) involves utilising software libraries and tools to extract text from images or documents captured by our Raspberry Pi camera module V2.

OCR technology allows our Raspberry Pi 5 to recognize printed or handwritten text within images and convert it into machine-readable text. Some of the libraries and tools we used are pytesseract, OpenCV and Tesseract.

1. Image Acquisition

Activate the camera and take an image of the text you want to convert into machine-readable words.

2. Preprocessing

The image is cleaned before it is passed to the next step. This includes converting the image to grayscale, aligning the document and reducing noise to differentiate text from the background.

3. Character Recognition

The OCR algorithm isolates each character image and matches it against a library of known characters. Lines and shapes are also compared to stored character shapes to find the closest match.

4. Conversion

OCR converts the characters into machine-readable text and saves it into a temporary file, which can be accessed to serve multiple purposes.
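Steps 2 to 4 above can be sketched with OpenCV and pytesseract roughly as follows. This is a simplified illustration under assumptions, not our exact code: the function names are hypothetical, the image is assumed to be already captured and saved to disk, and the document-alignment step is omitted. The third-party imports sit inside the functions so the file can be loaded on a machine without the camera stack installed.

```python
import tempfile


def preprocess(image_path):
    """Step 2: convert to grayscale, reduce noise, and separate text from background."""
    import cv2  # OpenCV

    image = cv2.imread(image_path)
    if image is None:
        raise FileNotFoundError(f"Could not read image: {image_path}")
    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    denoised = cv2.medianBlur(gray, 3)
    # Otsu's method chooses the black/white threshold automatically.
    _, binary = cv2.threshold(
        denoised, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU
    )
    return binary


def recognise(image):
    """Step 3: pass the cleaned image to the Tesseract engine via pytesseract."""
    import pytesseract

    return pytesseract.image_to_string(image, lang="eng")


def save_text(text):
    """Step 4: write the recognised text to a temporary file and return its path."""
    with tempfile.NamedTemporaryFile(
        mode="w", suffix=".txt", delete=False, encoding="utf-8"
    ) as handle:
        handle.write(text)
        return handle.name


def image_to_text_file(image_path):
    """Run steps 2 to 4 on an image that has already been captured."""
    return save_text(recognise(preprocess(image_path)))
```

Calling `image_to_text_file("capture.jpg")` (a placeholder file name) would return the path of the temporary text file, which another feature can then read.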

Text-To-Speech

Our text-to-speech uses gTTS, a Python library and command-line tool that interfaces with Google Translate’s text-to-speech API. It allows us to convert text into spoken words.

1. Text Input

We supply the algorithm with the text we want to convert to speech.

2. Text Preprocessing

The text is analyzed for linguistic structure, including pronunciation and prosody (the rhythm, stress, and intonation of speech).

3. Synthesis

The text is broken down into phonemes, the basic units of sound in speech. The phonemes are converted into audio signals. This is done using pre-recorded human speech segments or by generating the sound using algorithms.

4. Audio Output

The synthesized speech is saved in a temporary mp3 file and played back through the speakers, allowing the user to hear the spoken text.

To assist individuals with visual impairments, we decided to combine Optical Character Recognition (OCR) with text-to-speech (TTS) functionality. This integration converts text from images into spoken words (image to speech), making it easier for blind users to identify text.

This combined function empowers individuals with visual impairments to identify and comprehend text content captured by the Raspberry Pi’s Camera Module V2, enhancing their accessibility and independence.
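The handoff from recognised text to speech could look something like the sketch below. It is an illustration under assumptions rather than our exact code: `prepare_text` and `text_to_mp3` are hypothetical names, the whitespace cleanup is an added convenience, and gTTS needs an internet connection because it calls Google Translate’s text-to-speech service.

```python
import tempfile


def prepare_text(ocr_text):
    """Collapse the line breaks and repeated spaces that OCR output often contains."""
    return " ".join(ocr_text.split())


def text_to_mp3(text, lang="en"):
    """Synthesise speech with gTTS and return the path of a temporary mp3 file."""
    from gtts import gTTS  # third-party package; imported here so the file loads without it

    spoken = prepare_text(text)
    if not spoken:
        # Nothing was recognised, so there is nothing to read aloud.
        raise ValueError("no text to convert to speech")

    # Reserve a temporary file name, then let gTTS write the audio to it.
    with tempfile.NamedTemporaryFile(suffix=".mp3", delete=False) as handle:
        mp3_path = handle.name
    gTTS(text=spoken, lang=lang).save(mp3_path)
    return mp3_path
```

The returned path can then be handed to whichever audio player drives the speakers. The player is left out because it depends on the hardware setup.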

Prototype

Our prototype differs significantly in appearance from the final product due to the integration of large, bulky electronics, necessitated by budget constraints. Using smaller components would have exceeded our $300 limit. Consequently, the Raspberry Pi 5 processor couldn’t be mounted on the glasses and required a wired connection to the main unit. The final product will seamlessly integrate all components into the glasses.

The high power demand of the Raspberry Pi 5 meant the prototype had to run on a power supply during the competition, as a battery would drain too quickly. Future versions will address this by making the product battery-operated.

Despite these differences, our prototype served as a proof of concept. We successfully developed all intended functionalities and effectively addressed the pain points of our users. Future development will involve miniaturising the electronic components and refining the design for a more streamlined and integrated final product.

Pitching Our Project

A Collaborative Success

Pitching our project to a diverse panel of judges and the public was a pivotal challenge we embraced with strategic preparation and teamwork. Instead of relying on a single presenter, we leveraged the collective expertise of our team. Each member pitched their specific contributions, showcasing our cohesive teamwork and deep understanding of our project.

We brought our product to life with diagrams, slides, live demonstrations, and clear explanations. This dynamic approach highlighted our innovation and made our presentation engaging and accessible for all judges, regardless of their technical background.

We emphasized how our solution addressed a critical need, highlighting its innovative features and practical benefits. Our tagline, “Restoring vision to our audience,” resonated strongly with the judges, capturing the essence of our mission and goals.

Our pitch distinguished our project and secured recognition, exemplifying our ability to innovate, collaborate effectively, and communicate ideas persuasively. This experience underscored our dedication and capability in engineering solutions that make a real difference.

Reclaiming Vision, Renewing Hope, Transforming Lives

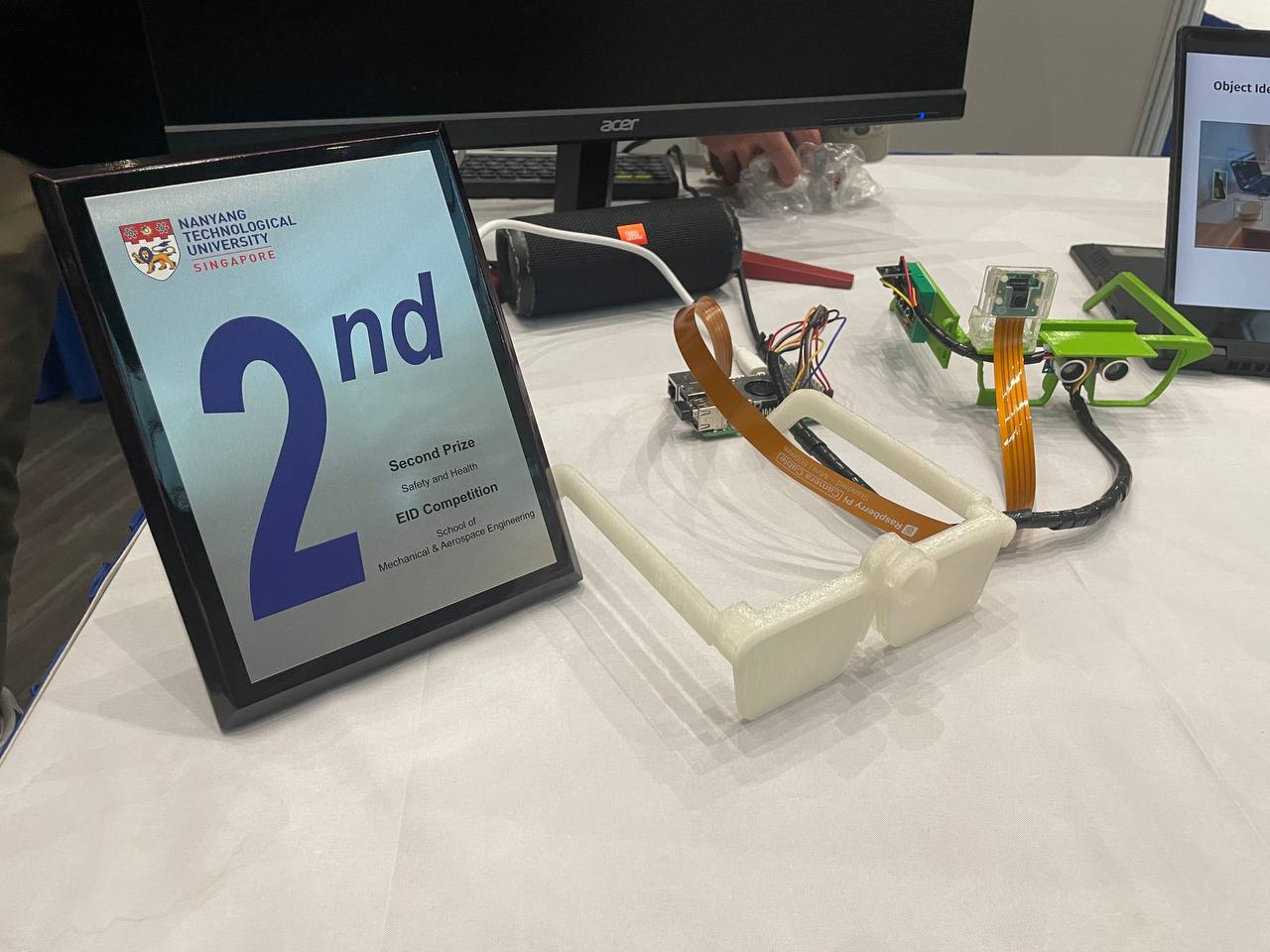

Results

Our hard work and effective communication strategies paid off, leading to impressive results. We successfully completed our project on time and within the budget, delivering a solution that exceeded expectations. Overall, the project was a resounding success, reflecting our team’s dedication, ingenuity, and ability to work collaboratively towards a common goal. Despite not securing first place, the experience was invaluable, and the recognition we received affirmed the quality and impact of our work.

Securing 2nd Place

Our project was awarded second place in the EID competition, a testament to the innovative approach and thorough execution by our team. The judges particularly appreciated our problem-solving skills, the practical applicability of our solution, and our ability to communicate our ideas clearly and effectively.

Positive Feedback

The feedback from both the mentors and judges was overwhelmingly positive. They commended our team for our thorough research, detailed planning, and seamless execution. Our ability to communicate complex technical information in a clear and understandable manner was particularly highlighted.

Functional Prototype

We developed a fully functional prototype that met all of the competition’s requirements, including its budget and time constraints. Despite the initial setback with the Raspberry Pi 5, we managed to adapt and deliver a robust and efficient solution. This prototype not only demonstrated our technical skills but also showcased our ability to overcome challenges and innovate under pressure.

Team Growth and Development

Beyond the tangible outcomes, the project significantly contributed to our personal and professional growth. We enhanced our technical skills, developed effective communication strategies, and learned valuable lessons in leadership and teamwork. This experience has prepared us for future engineering challenges and equipped us with the skills necessary for successful careers.